“Go forward 45 feet,” the robotic voice said. “Stairs at 12 o’clock.”

Bruce Howell, with a baseball cap on his head, sunglasses over his eyes, and a white cane in hand, followed the directions leading him out of the Boston Design Center toward a waiting Motional automated vehicle.

“Follow curb. Turn left in six feet,” the voice said. “Crosswalk detected. Proceed with caution.”

Once across the street, Howell swiped his finger across his smartphone’s screen. The vehicle’s rear passenger door unlocked. Using a couple more verbal cues, Howell was able to find the vehicle, open the door, fold up his cane, and sit himself down in the backseat.

The voice guiding Howell, who is blind, belongs to a wearable, AI-powered navigation assistance system created by researchers at Boston University. And the vehicle it guided him to was a prototype of a Motional robotaxi, which sports several features specifically designed to increase accessibility for those who are blind or have low vision.

Bruce Howell, who is blind, helped test a wearable navigational assistance device developed by a Boston University research team that used AI to help direct him to a nearby Motional AV.

“Finding the car is an extremely challenging problem for blind individuals,” said Felice Ling, a lead user experience researcher for Motional. “We can provide features that help, like an app button that makes the vehicle’s horn honk, but we need a separate solution to come into play long before the person is in the vicinity of the car. The Boston University team has a very interesting solution that pairs well with the features that are in our robotaxi.”

Boston University’s OpenGuide team developed the system as part of the U.S. Department of Transportation’s Inclusive Design Challenge, an initiative created to encourage the autonomous vehicle industry to integrate features that promote accessibility early in the development cycle.

“Mobility assistance is a really important component in ensuring access to a good quality of life,” said Eshed Ohn-Bar, an assistant professor of electrical and computer engineering who heads up the OpenGuide team. “If you were to choose a problem that has the most bang for the buck, mobility assistance is one of the grand challenges I’d like to see resolved.”

The OpenGuide team is one of 10 national semi-finalists. The winning team, which will receive $1 million in funding to continue the research, will be announced later this summer.

Designs that improve life quality

During the project, the OpenGuide team also partnered with The Carroll Center for the Blind, a Newton-based non-profit that provides services to those with visual impairments.

“This partnership was exciting because we share a common mission: using technology to increase accessibility and independence,” said Eryk Nice, Motional’s vice president of technology strategy. “We are each trying to solve one piece of the bigger picture, and we truly enjoyed the opportunity to pair the OpenGuide team's vision for the future with what we are doing to make our robotaxi accessible to riders with disabilities, today.”

Accessibility is one of Motional’s core focus areas, alongside safety and reliability. During development, Motional has worked with groups such as the Institute for Human Centered Design to incorporate features for different physical and mental needs into the vehicle, which is based on Hyundai’s IONIQ 5.

“When designing a product, we don't want to just make something that is cool or high-tech, we want to make sure our designs actually improve people's lives,” said Ling. “That's what's critical to the success of any product.”

Motional worked with people who are blind or have low vision to develop features that improve the accessibility of driverless rideshares. These features include an app button that triggers the vehicle's horn, making the vehicle easier to find.

Howell, who works for the Carroll Center, was one of several blind individuals who participated in the project. While technology has made it easier to summon rideshares, those who are blind or have low vision can still have difficulty getting streetside and then identifying the vehicle, especially on a busy city block.

“The driver doesn’t always pull up in front of you. So how do you make that final connection?” he said. “Anything that lets me be more independent and make that final connection is a critical piece.”

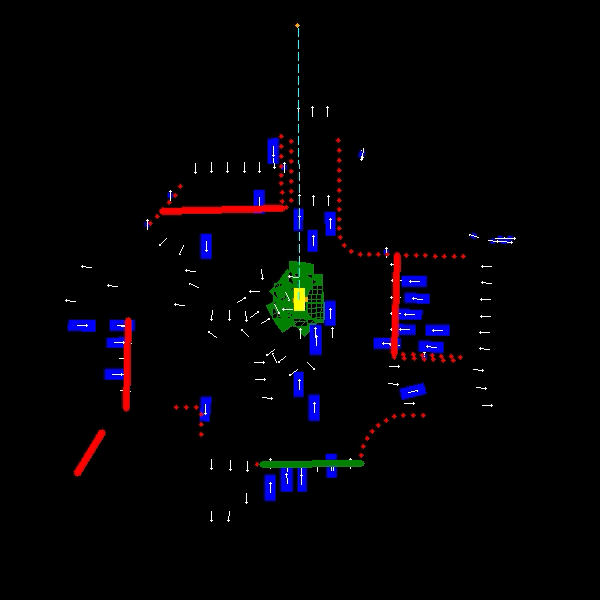

During the tests with BU and Motional, participants wore a lightweight device with multiple cameras and sensors mounted on the chest and a small computer unit on the back. Using a combination of sensor data and camera images, the computer unit could effectively “see” the volunteers’ surroundings, identify the rideshare vehicle, measure distances, and provide auditory cues.

The devices directed participants to the waiting Motional vehicle and gave them “situational awareness” of obstacles such as curbs and landscaping along the path. Once inside the robotaxi, the participants could go through the vehicle’s audible automated onboarding process. This process introduces riders to three hard keys located beneath the ride information screen. Each key features the raised outline of a distinct shape (a square, a circle, or a triangle). Motional finalized these shapes based on its research with people with disabilities.

Motional worked with people who are blind or have low vision to design three hard keys with raised shapes located beneath its rider information screen.

“While there are established guidelines for accessible design, there are still knowledge gaps, especially for a novel product like ours, that we can only gain when working directly with impacted populations and learning first-hand about their experiences,” said Linh Pham, a lead product designer with Motional. “For example, some of our concepts for finding the vehicle came from diary studies and observing the interaction between blind riders and human drivers.”

Ohn-Bar said Motional’s involvement in the project was critical because it gave participants a full start-to-finish experience. “We needed the industry perspective, otherwise it’s hard to know if the system is really practical in the end,” he said.

Making a robotaxi ride more human

One of the BU team’s goals is to create a navigational assistance system that uses artificial intelligence to perform like human mobility guides, which are trained to help guide visually impaired persons with verbal cues.

In developing its robotaxi, Motional is looking to reproduce the experience of riding in a vehicle with a human driver, only safer. The company has used feedback collected from tens of thousands of test rides to refine details such as how sharply the vehicle takes a turn. Motional is also developing another feature based on its research with people with disabilities: the ability for riders to ask the vehicle to wait a few extra minutes if they are running late.

“As a designer, it’s our role to empathize with the people we’re designing for,” said Pham. “This empathy comes from observing people in real life and how they interact with their environment, in order to understand the diverse needs and pain points.”

Ohn-Bar said his work is also personal; his grandmother lost much of her sight a few years back.

“She keeps calling me and asking, ‘When can I use your app?’” he said.