While technologically advanced features such as lane assist, blind-spot detection, and emergency braking can make it feel like your vehicle is able to drive itself, there's a big difference between a vehicle that can pull into a parking space unassisted and a vehicle that can travel miles from Point A to Point B without anyone behind the wheel.

In this chapter of the DriverlessEd series, we explain the difference between an autonomous vehicle (AV) and a vehicle with advanced driver assistance systems (ADAS). Knowing the difference matters, especially as both types of technology become more common.

ADAS features are driver-friendly, can assist with certain driving tasks, and can even help avoid accidents. However, failing to understand the limitations of ADAS compared to AVs could jeopardize the safety of the driver, passengers, pedestrians, and other nearby vehicles.

Be sure to read Chapter 1: Why Driverless Vehicles?

MATURATION OF ADAS

The original automobiles were revolutionary but also mechanically straightforward. Drivers used a set of pedals and levers to control speed and stopping and a wheel to change direction. Over the decades these mechanics have become increasingly sophisticated as manufacturers introduced consumer-friendly technologies that made driving easier and safer.

Now, features such as power steering, automatic transmissions, cruise control, and anti-lock brakes are standard in most personal vehicles.

Automobile technology continues to evolve. Cameras and radar sensors can alert drivers to other cars or pedestrians in blind spots, or activate the brakes if a vehicle ahead stops short. Cruise control has evolved into adaptive cruise control that, when combined with lane-centering technology, can guide a vehicle down the highway while maintaining a safe distance from other cars.

All of these features offer convenience to drivers, and some even help avoid accidents. However — and this is the important part — a car or truck can have all the ADAS features in the world, but it’s still not an autonomous vehicle.

An ADAS-laden vehicle may be able to drive down the highway, navigate stop-and-go traffic, and park itself, but it requires the driver to be ready to take control of the vehicle at any moment. No watching movies, no reading, no backseat naps.

By comparison, AVs are designed to handle every part of the trip, from start to stop, without the help of a human driver. AVs have redundant systems, are significantly more technologically advanced, and use machine learning principles to continuously get smarter with each mile they drive. So that book you want to read? You’ll be able to read it in the back of an AV.

Your car or truck may have the most advanced, sophisticated, helpful, and impressive ADAS features, but it still doesn’t make your vehicle autonomous.

WHEN IS A VEHICLE AUTONOMOUS?

What capabilities are required to earn the coveted designation of “autonomous vehicle”? What if a vehicle can brake, accelerate, and steer on its own — does that make it autonomous?

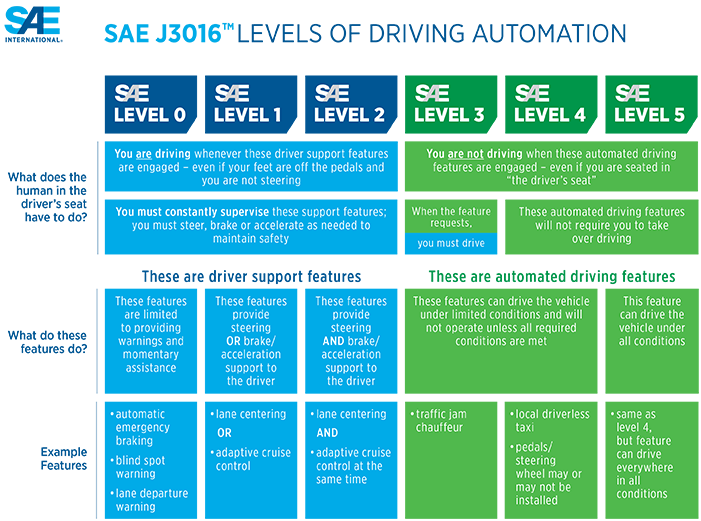

Most AV technology companies, including Motional, use the Levels of Driving Automation published by SAE International, a global association of aerospace, commercial vehicle, and automotive engineers, to define their autonomy capabilities. SAE has outlined six distinct levels, numbered 0 through 5, and specified the capabilities needed to reach each one.

Every consumer vehicle currently on the road — even those with the most advanced ADAS features — requires drivers to remain on-duty, “constantly supervise” the features, and be ready to take back control at any moment.

By comparison, Motional's robotaxis will operate at Level 4 autonomy. That means the vehicle will be able to handle every part of the ride, from start to stop, without anyone behind the wheel.
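The distinction between the levels can be sketched as a small data structure. This is a minimal illustration, not an official SAE artifact: the enum member names paraphrase the level names, and the two helper functions are hypothetical, added only to show where the dividing lines fall.

```python
from enum import IntEnum


class SAELevel(IntEnum):
    """The six SAE driving automation levels, numbered 0 through 5."""
    NO_AUTOMATION = 0
    DRIVER_ASSISTANCE = 1
    PARTIAL_AUTOMATION = 2
    CONDITIONAL_AUTOMATION = 3
    HIGH_AUTOMATION = 4
    FULL_AUTOMATION = 5


def human_must_supervise(level: SAELevel) -> bool:
    """Levels 0-2: the human is driving and must constantly supervise."""
    return level <= SAELevel.PARTIAL_AUTOMATION


def human_must_be_ready_to_take_over(level: SAELevel) -> bool:
    """Levels 0-3: a human must be able to take control when needed.
    Levels 4-5: the vehicle handles the trip itself (Level 4 only
    within the conditions it was designed for)."""
    return level <= SAELevel.CONDITIONAL_AUTOMATION


for level in SAELevel:
    print(level.value, level.name, human_must_be_ready_to_take_over(level))
```

In this sketch, vehicles with ADAS sit at Level 2 or below, while a Level 4 robotaxi is the first point where both helpers return `False`.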

Programming a vehicle to drive for miles down a road, change lanes safely, and even navigate a traffic jam without the driver intervening is a noteworthy advancement in vehicle technology. But as the driving scenarios become more challenging (for example, encountering road work that requires crossing a double yellow line during rush hour), the technological differences between AVs and vehicles with ADAS become more evident.

Here’s another example: vehicles with ADAS need a human driver to navigate the hustle and bustle of a busy grocery store parking lot. Meanwhile, passengers in an AV can continue writing out their shopping list until they’re dropped off.

THEN HOW DO AVs WORK?

AVs and vehicles with ADAS features both use a combination of hardware — often cameras, radars, and lidars — and software to understand what’s around them.

For example, a radar sensor connected to your vehicle’s on-board computer can alert you if a pedestrian is nearby when you start to back out of a parking space. Or a front-facing camera can help identify travel lanes and prevent your vehicle from drifting.

Ultimately, though, in a vehicle with ADAS, the human behind the wheel is still responsible for driving. An AV requires a much more complex combination of hardware, software, and computing power to see, think, and react. Level 4 and 5 autonomous vehicles, such as robotaxis, need redundancies in hardware, as well as computer modeling based on millions of miles of driving scenarios, to be prepared to safely handle dynamic road scenarios.

Think about how we drive as humans: We use our eyes and ears to see and hear what’s around us. This sensory input is transmitted to our brain and we use our years of driving experience to understand what is happening and predict what the vehicle in front of us may do next, or anticipate whether a pedestrian is about to cross the street. These predictions are turned into commands that our body follows to control our vehicle’s speed and direction and get us where we need to go safely.

This video shows how a human driver sees the road compared to an AV, which has more than 30 cameras, radars, and lidars.

An autonomous vehicle essentially needs to be able to do the same. Motional vehicles, for example, are equipped with more than 30 cameras, lidars, and radars to “see” every vehicle, pedestrian, and object within a 300-meter radius. Microphones also let the AV “hear” sirens from emergency vehicles.

All this information is fed into a high-power onboard computer that uses machine learning-based modeling to understand whether a detected object is a convertible, box truck, pedestrian, cyclist, scooter, or even a plastic bag blowing across the street. This modeling is constantly fine-tuned based on lessons learned from driving millions of miles on public roads and in simulations. The computer then plans a safe route and tells the vehicle how to proceed: whether to turn, slow down, or go faster, for example. And, as with our brains, all of these processes happen within a matter of milliseconds.
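The flow described above (detect, classify, then plan) can be illustrated with a toy example. This is only a sketch under heavy assumptions: in a real AV the labels come from machine learning models and the planner is far more sophisticated. The `Detection` type, the `HARMLESS` set, the `plan` function, and the 30-meter threshold are all hypothetical.

```python
from dataclasses import dataclass


@dataclass
class Detection:
    label: str         # e.g. "pedestrian", "box truck", "plastic bag"
    distance_m: float  # distance ahead of the vehicle, in meters
    in_path: bool      # whether the object is in the planned path


# Hypothetical set of labels the planner can safely ignore.
HARMLESS = {"plastic bag"}


def plan(detections: list[Detection], stop_within_m: float = 30.0) -> str:
    """Pick one action from classified detections (toy rule, not a real planner)."""
    relevant = [d for d in detections if d.in_path and d.label not in HARMLESS]
    if not relevant:
        return "proceed"
    nearest = min(relevant, key=lambda d: d.distance_m)
    return "brake" if nearest.distance_m <= stop_within_m else "slow down"


scene = [
    Detection("plastic bag", 5.0, in_path=True),
    Detection("cyclist", 80.0, in_path=True),
]
print(plan(scene))
```

The example shows why classification matters: the plastic bag is closer than the cyclist, but only the cyclist affects the decision.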

One other difference involves redundancies. Vehicles with ADAS are considered to have a single point of failure, meaning if a sensor malfunctions, the capability that depends on it stops working, and the human driver has to take over. AVs are built with redundancies in place so they can continue to operate if a sensor is damaged. For example, a vehicle with ADAS may have four cameras, but a Motional robotaxi will have 13, allowing it to continue operating if one of them fails.
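The difference between a single point of failure and a redundant design can be sketched in a few lines. This is an illustrative assumption, not a description of any real vehicle's logic: the `capability_available` helper and the minimum of 10 healthy cameras are hypothetical figures chosen for the example.

```python
def capability_available(sensor_ok: list[bool], min_required: int) -> bool:
    """A capability stays available while enough of its sensors are healthy."""
    return sum(sensor_ok) >= min_required


# Single point of failure: one camera backs the capability, and it has failed.
adas_lane_camera = [False]

# Redundant setup: 13 cameras, one of which has failed.
# The minimum of 10 healthy cameras is an assumed figure.
av_cameras = [True] * 12 + [False]

print(capability_available(adas_lane_camera, min_required=1))
print(capability_available(av_cameras, min_required=10))
```

With one sensor and a requirement of one, any failure removes the capability. With spare sensors above the minimum, a failure leaves the capability intact.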

CONSTANTLY IMPROVING

One big difference between AVs and vehicles with ADAS features is that AVs get smarter with each mile they drive.

No, AVs aren’t sentient. But every piece of information the vehicle processes (every camera frame and lidar scan, every steering wheel movement and brake tap, every vehicle, pedestrian, and object it encounters) is collected as data and uploaded at the end of the day into an enormous cloud-based data repository. We’re talking terabytes of information every day. We then use machine learning-powered systems to quickly and accurately comb through all this information to identify interesting things that happened during the day.

Maybe a truck in an adjacent lane suddenly cut across two lanes of traffic, and in response, our AV had to slam on the brakes. This incident would be tagged and used to teach the entire system, meaning every Motional robotaxi in the fleet, how to recognize the signs that a vehicle is about to cut in front.
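To make the idea of tagging an incident concrete, here is a minimal sketch that flags hard-braking moments in a drive log. It swaps in a simple fixed threshold for the machine learning-powered systems described above. The `Sample` type, the `tag_hard_braking` function, and the 4.0 m/s² threshold are all hypothetical.

```python
from dataclasses import dataclass


@dataclass
class Sample:
    t_s: float        # timestamp in seconds
    speed_mps: float  # vehicle speed in meters per second


def tag_hard_braking(log: list[Sample], threshold_mps2: float = 4.0) -> list[float]:
    """Return timestamps where deceleration between samples meets the threshold."""
    tagged = []
    for prev, cur in zip(log, log[1:]):
        dt = cur.t_s - prev.t_s
        if dt <= 0:
            continue  # skip out-of-order or duplicate timestamps
        decel = (prev.speed_mps - cur.speed_mps) / dt
        if decel >= threshold_mps2:
            tagged.append(cur.t_s)
    return tagged


drive_log = [
    Sample(0.0, 20.0),
    Sample(1.0, 19.0),  # gentle slowdown: 1 m/s^2
    Sample(2.0, 13.0),  # hard braking: 6 m/s^2
    Sample(3.0, 13.0),
]
print(tag_hard_braking(drive_log))
```

A tagged timestamp would then point reviewers and training pipelines to the surrounding sensor data, such as the moments before the truck cut in.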

ADAS systems may receive an occasional update to their hardware and software components, but these updates typically fix bugs rather than unlock new or improved capabilities.

WHAT’S NEXT

ADAS has made it easier, safer, and in some cases, more fun to drive. However, even the most advanced ADAS systems in the consumer marketplace fall short of making the vehicle an AV.

AVs have more cameras, radars, and lidars, significantly more powerful and sophisticated computer systems, and machine learning-powered modeling to safely handle every step of the journey, from door to door.

In Chapter 3, we’ll discuss how scientists and engineers have designed robotaxis to ensure passengers feel comfortable riding in a driverless vehicle.

Have a question about robotaxis, how they work, or how they'll improve transportation in your communities? Tag us at #DriverlessEd on Twitter and Instagram or DM @motionaldrive.