#DriverlessEd aims to teach consumers about autonomous vehicles (AVs), everything from the driverless technology that powers them, to the rigorous safety practices and the rider experience.

Be sure to explore the previous six chapters:

Chapter 1: Why Driverless Vehicles?

Chapter 2: The Difference Between AVs and ADAS

When developing the future of mobility, nothing is more important than safety. For autonomous vehicle (AV) companies, the safety of those driving, walking, or biking near our robotaxis is just as important as the safety of the person inside the vehicle.

Driverless vehicles, such as robotaxis, use a combination of sensors, onboard maps, GPS devices, and microphones to see, understand, and track every other vehicle, cyclist, jogger, stop sign, traffic light, intersection, speed bump, and construction cone around them.

Unlike human drivers, AVs have full 360-degree vision, allowing them to know what’s happening around them up to 300 meters away, including in the dark. If an AV can see an object in its driving environment, it can create a plan to handle it safely.

KEEPING TRACK

Anyone who’s driven through a busy downtown area knows how difficult it can be to keep track of everything that’s happening around you. There are other vehicles trying to change lanes with little warning. Throngs of pedestrians ready to step off the curb. Cyclists darting across an intersection. Maybe even a light-rail train approaching from behind.

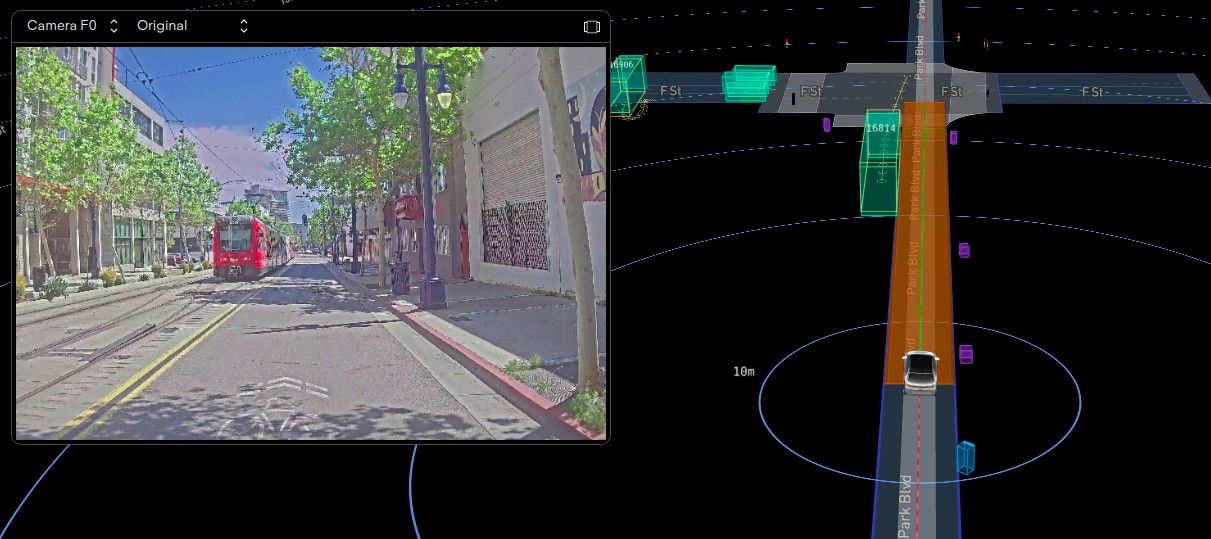

This image shows the Motional AV’s sensors and onboard computer picking up and correctly classifying San Diego’s Trolley during testing.

Some of it is just background noise. Many vehicles in our driving environment are driving in a different direction. Most pedestrians will remain on the sidewalk.

However, driving through an environment like this can be fraught even for an experienced human driver. So just how does a driverless vehicle know what to watch out for and what to ignore?

Driverless vehicles operate safely using advanced technology that helps them see and understand what’s happening around them, and then use that information to plan a safe route forward. This is all handled by three separate but cooperative autonomous driving activities known to engineers as “perception,” “prediction,” and “planning.”

“By working together, these three systems provide the AV’s onboard computer with a holistic understanding of the driving environment beyond what a human driver is capable of,” said David Schwanky, Motional’s vice president of Compute and Onboard Runtime Environment. “This makes the AV more prepared to respond to unexpected changes in the environment safely.”

PERCEIVING THE ROAD

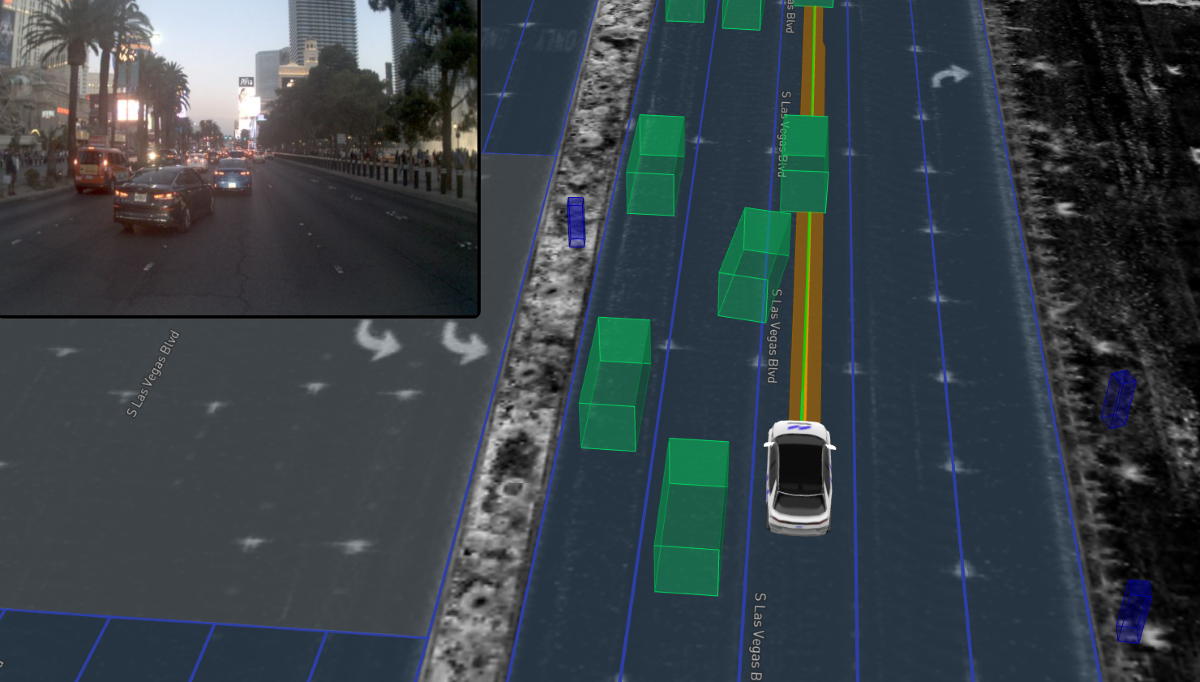

A driverless vehicle’s perception system collects data from its sensor suite (all those cameras, lidars, and radars) to identify objects within about 300 meters of the vehicle. It then uses a machine-learning-trained algorithm to classify and label those objects. The labels tell the vehicle’s onboard computer whether an object is something it needs to be aware of, such as a car, a truck, a motorcycle, or a small animal, or something it can ignore, like a wall or a tree.
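To make the "track it or ignore it" idea concrete, here is a minimal Python sketch. It is an illustration only, not Motional's software: the `Detection` class, the label names, and the `objects_to_track` function are all hypothetical, and a real perception system relies on trained models rather than a fixed list of labels.

```python
from dataclasses import dataclass

# Hypothetical label set for illustration; the categories come from the
# examples in the article, not from any real perception stack.
RELEVANT_LABELS = {"car", "truck", "motorcycle", "small_animal"}
MAX_RANGE_M = 300.0  # approximate sensing range quoted in the article


@dataclass
class Detection:
    label: str         # class assigned by the (assumed) classifier
    distance_m: float  # distance from the vehicle in meters


def objects_to_track(detections):
    """Keep detections that are in range and labeled as relevant."""
    return [
        d for d in detections
        if d.distance_m <= MAX_RANGE_M and d.label in RELEVANT_LABELS
    ]


scene = [Detection("car", 50.0), Detection("tree", 10.0), Detection("truck", 400.0)]
tracked = objects_to_track(scene)  # only the car is kept
```

In this toy scene the tree is dropped because of its label, and the truck is dropped because it is beyond the assumed 300-meter range.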

Additional algorithms stitch those objects onto a 3D model of the driving environment that features details such as road lanes, traffic lights, and speed bumps. All of this takes place in a fraction of a second, giving the vehicle real-time situational awareness of what’s happening around it.

That information is then passed down to the vehicle’s prediction system, which tracks every object, determines how fast it’s moving and in what direction, and, as the name suggests, predicts where it’s going to move next based on its past actions.

PREDICTING WHAT’S NEXT

If you’re a pedestrian within 300 meters of a Motional robotaxi, the vehicle can see you, remember where you are, and calculate the chances that you’re going to step off that curb into a driving lane. Or if you’re in a car driving next to a robotaxi, the vehicle’s onboard computer is looking for signs you’re about to change lanes.

Our system looks at the position of every object and reevaluates the predicted path for each object 10 times every second, ensuring the vehicle is always ready to react and plan a safe path forward. But before the tech is ever tested on a public road, it’s first run through a number of simulated virtual scenarios based on real-world incidents. This helps ensure our system is prepared to handle encounters with cyclists, pedestrians, and other vehicles.
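For readers who like to see the arithmetic, the sketch below shows one very simple way to turn two position updates into a predicted path. It assumes a constant-velocity model, which is a textbook simplification and not a description of Motional's prediction system. The `Track` class and both function names are hypothetical; only the 10-updates-per-second rate comes from the text above.

```python
from dataclasses import dataclass

UPDATE_HZ = 10        # re-evaluations per second, per the article
DT = 1.0 / UPDATE_HZ  # seconds between updates


@dataclass
class Track:
    x: float          # position in meters
    y: float
    vx: float = 0.0   # estimated velocity in meters per second
    vy: float = 0.0


def update_track(track, new_x, new_y, dt=DT):
    """Estimate velocity from the previous and the newly observed position."""
    return Track(new_x, new_y, (new_x - track.x) / dt, (new_y - track.y) / dt)


def predict_position(track, horizon_s):
    """Constant-velocity guess at where the object will be after horizon_s seconds."""
    return (track.x + track.vx * horizon_s, track.y + track.vy * horizon_s)


# A pedestrian who moved 0.15 m along x in one update is walking at about 1.5 m/s.
pedestrian = update_track(Track(0.0, 0.0), 0.15, 0.0)
future_x, future_y = predict_position(pedestrian, 2.0)
```

Repeating this update every tenth of a second means a prediction is never more than a fraction of a second out of date, which is the point of re-evaluating so often.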

PLANNING AHEAD

This part of the technology figures out the best way to navigate the world safely, efficiently, and legally. It chooses which lanes the vehicle should be in, how fast it should go, and when to turn and brake. The onboard computer then double-checks the vehicle’s speed and location multiple times every second to make sure it’s executing the planned route perfectly.

We want our vehicles to plan only movements that are safe and legal. So our vehicles are programmed to follow local driving rules – they can’t speed, can’t double-park, have to use blinkers when turning, and have to follow vehicles in front of them at a safe distance. This ensures that we keep not just our own vehicle and passengers safe, but everyone around us.
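Two of those rules, not speeding and keeping a safe following distance, are easy to express as a check on a planned move. The sketch below is illustrative only. The function names are hypothetical, and the two-second time gap is a common rule of thumb for human drivers that we assume here; it is not a figure from Motional.

```python
def safe_following_gap_m(speed_mps, time_gap_s=2.0):
    """Distance covered in time_gap_s at the given speed (assumed 2 s rule of thumb)."""
    return speed_mps * time_gap_s


def is_plan_allowed(planned_speed_mps, speed_limit_mps, gap_to_lead_m, time_gap_s=2.0):
    """Return True only if the plan obeys the speed limit and keeps a safe gap."""
    within_limit = planned_speed_mps <= speed_limit_mps
    safe_gap = gap_to_lead_m >= safe_following_gap_m(planned_speed_mps, time_gap_s)
    return within_limit and safe_gap


ok = is_plan_allowed(10.0, 13.0, 25.0)          # under the limit, 25 m gap vs. 20 m needed
too_fast = is_plan_allowed(15.0, 13.0, 100.0)   # over the limit
too_close = is_plan_allowed(10.0, 13.0, 15.0)   # 15 m gap vs. 20 m needed
```

A planner built this way would simply discard any candidate move that fails the check, so an illegal or unsafe option never gets chosen.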

EXPLAINING SENSORS

What exactly do we mean by sensors? Like the one that automatically opens the door for me at the grocery store?

Sort of. Just much more technologically advanced.

Autonomous vehicles are typically equipped with dozens of sensors that are continuously looking out for pedestrians, cyclists, animals, and other drivers. Motional robotaxis, for example, use an assortment of cameras, lidars, and radars. Some of the lidars and radars are used to detect objects really close to the vehicle, and others look for objects farther down the road. All these sensors are arranged so that our vehicle can “see” what’s happening around it in every direction – from as far as 300 meters away to as close as right next to the side panel.

Cameras use light to capture a digital image. Lidars determine the distance to an object using pulses of laser light. Radars use radio waves to determine an object’s location and how fast it is moving. All three sensors work together to create a 3D picture of everything in the driving environment: pedestrians waiting at a crosswalk, vehicles coming up from behind, traffic cones up ahead. It’s this information that the vehicle’s onboard computer uses to predict movements of objects and plan a safe path forward.
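How does a pulse of laser light become a distance? A common approach for pulsed lidar is "time of flight": measure how long the pulse takes to bounce back, multiply by the speed of light, and divide by two because the pulse travels out and back. The sketch below shows that arithmetic. The function name is hypothetical, and we are assuming time of flight for illustration; the article does not say which measurement method Motional's lidars use.

```python
SPEED_OF_LIGHT_MPS = 299_792_458.0  # meters per second, in a vacuum


def lidar_distance_m(round_trip_s):
    """Distance to an object from the round-trip time of a laser pulse."""
    # Halve the path because the pulse travels to the object and back.
    return SPEED_OF_LIGHT_MPS * round_trip_s / 2.0


# A pulse that returns after 2 microseconds hit something roughly 300 meters away.
distance = lidar_distance_m(2e-6)
```

The numbers show why the electronics have to be fast: an object at the edge of the 300-meter range returns its echo in about two millionths of a second.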

SIREN CALL

Prioritizing safety also means prioritizing the safety of those working to keep us safe – specifically, emergency responders.

Human drivers know to pull over to the side of the road when they hear the siren of a police car or see the flashing lights of an ambulance, so that the emergency vehicle can pass. Driverless vehicles have the technology and training to do the same thing.

Motional robotaxis, for example, will have microphones that can detect sirens from oncoming emergency responders. The driverless vehicle will know how to combine that sound with other sensor data to determine what direction the emergency responder is heading, decide whether it needs to pull over, and identify a safe place to do so.

Motional is also working directly with emergency responders to help them understand how our driverless vehicles work and how they can safely interact with them on the roadway.

KEEP IT CLEAN

As anyone who wears glasses can attest, seeing clearly requires keeping the lenses clean and free of smudges, scratches, and cracks.

The same is true for our sensors. Seeing a family ready to cross the street requires having clean, properly functioning equipment. As explained in Chapter 5, each Motional vehicle receives a multi-point, bumper-to-bumper safety inspection before it rolls out of the garage. This includes making sure each sensor is clean and working properly.

But cars get dirty while driving. The Las Vegas desert can kick up dust storms, for example. Boston’s wintry weather can leave a film of salt on every exterior surface.

Motional has designed the sensors on its robotaxi to be self-cleaning. Some sensors are equipped with air or water jets to dislodge any foreign objects. Others have waterproof coatings to move water off quickly. That way our vehicles can remain on the road and continue safely detecting and navigating around objects in their driving path.

LAY OF THE LAND

The more familiar driverless vehicles are with their surroundings, the easier it is to anticipate what’s coming up ahead.

Before deploying a fleet in a new city, companies like Motional currently create detailed maps of the driving area. These maps contain details such as the location of every driving lane, curb cut, speed bump, traffic light, crosswalk, bridge, and overpass. Driverless vehicles are perfectly capable of detecting and understanding these features on their own – but having that background can help reduce confusion.

For example, it would be easy for driverless vehicles to lose track of traffic lights amidst all the bright lights and giant LED screens along the Las Vegas Strip. Or if Pittsburgh gets a coating of snow, driverless vehicles need to still know where the lanes are. By having baseline maps, our vehicles can still operate safely in those types of situations.

WE SEE YOU

Driverless vehicles have the potential to significantly improve roadway safety – not just for robotaxi passengers, but everyone else the vehicles share the road with.

A robotaxi’s sensors and software are fine-tuned to not just see everything around it, but also understand what it’s seeing, predict future movements, and then plan a safe path forward.

So when people look at our vehicles out on public roads, they should know that our vehicles can see them too.