Technically Speaking: How Continuous Fuzzing Secures Software While Increasing Developer Productivity

Motional’s Technically Speaking series takes a deep dive into how our top team of engineers and scientists are making driverless vehicles a safe, reliable, and accessible reality.

In Part 1, we introduced our approach to machine learning and how our Continuous Learning Framework allows us to train our autonomous vehicles faster. Part 2 discussed how we are building a world-class offline perception system to automatically label data sets. Part 3 announced Motional’s release of nuPlan, the world’s largest public dataset for prediction and planning. Part 4 explains how Motional uses multimodal prediction models to help reduce the unpredictability of human drivers. Part 5 looks at how closed-loop testing will strengthen Motional's planning function, making the ride safer and more comfortable for passengers. Part 6 explains how Motional is using Transformer Neural Networks to improve AV perception performance. In Part 7, Motional cybersecurity engineer Zhen Yu Ding explains how we use continuous fuzzing to save time.

Successful companies must minimize risk. Tabletop exercises are a common risk mitigation technique in which a company games out a problematic scenario to stress test its internal policies and procedures. These exercises let the company uncover gaps and weaknesses in a controlled environment rather than in public.

Developing secure software for a public product has a similar goal. We want to make sure the version used by the public is as safe and secure as possible before deploying it and, if there is a glitch, that the system can handle it gracefully. At Motional, we employ a technique known as fuzz testing, or fuzzing, which stress tests our autonomous vehicle software through the use of randomized, arbitrary, or unexpected inputs.

By throwing curveballs and changeups at our software, we’re able to uncover defects and edge cases that could cause errors or corrupt the software. Motional believes that regular fuzz testing with non-brittle tests enhances productivity and results in more robust software and a safer, more secure AV.

Fuzzing in a Nutshell

Fuzzing exercises software through the use of randomized, arbitrary, or unexpected inputs. For example, the software may expect dates formatted a certain way. Fuzzing finds out what happens if that formatting assumption is broken. It also lets us see the consequences of these errors, which could range from an error message to a full system crash. When we’re designing software that impacts how a vehicle operates, we want to be sure that the system can handle errors gracefully.
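The date example can be made concrete with a minimal sketch. Everything here is hypothetical: `parse_date` stands in for the software under test and contains a deliberate defect, and `fuzz_parse_date` is a naive random-input loop rather than a production fuzzer. A `ValueError` is treated as a graceful rejection; any other exception counts as a finding.

```python
import random
from datetime import date
from typing import List


def parse_date(text: str) -> date:
    """Hypothetical function under test; expects text like '2024-02-29'."""
    # Deliberate defect: the separators are indexed without checking the
    # length first, so short input raises IndexError instead of ValueError.
    if text[4] != "-" or text[7] != "-":
        raise ValueError("expected YYYY-MM-DD")
    return date(int(text[0:4]), int(text[5:7]), int(text[8:10]))


def fuzz_parse_date(iterations: int, seed: int) -> List[str]:
    """Feed random strings to parse_date and collect the ungraceful failures."""
    rng = random.Random(seed)  # seeded so that a run can be repeated
    alphabet = "0123456789-/ :T"
    crashes = []
    for _ in range(iterations):
        length = rng.randint(0, 12)
        candidate = "".join(rng.choice(alphabet) for _ in range(length))
        try:
            parse_date(candidate)
        except ValueError:
            pass  # graceful: the parser rejected the input cleanly
        except Exception:
            crashes.append(candidate)  # anything else is a finding
    return crashes
```

Calling `fuzz_parse_date(iterations=2000, seed=1)` returns the short strings that trip the unchecked index, which is the kind of broken formatting assumption a hand-written test can easily miss.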

We can fuzz an individual function in code, a binary that reads from a file or stream, an API that accesses a service, or a port (physical or logical) on a device, to name a few. For practical examples of how to create fuzz tests, we suggest browsing through Google’s fuzzing tutorials on GitHub [6].

When stress testing software that’s written in memory-unsafe languages, such as C++, fuzzing is a powerful technique for finding memory corruption vulnerabilities and undefined behavior. Attackers may use such vulnerabilities to trigger software crashes that disrupt service, leak sensitive information, or even take control of the software system. Dynamic analysis tools such as AddressSanitizer, MemorySanitizer, UndefinedBehaviorSanitizer, and Valgrind memcheck often complement fuzzing, because they can flag memory errors and undefined behavior that would not otherwise produce a visible crash.

We can see evidence of fuzzing’s effectiveness in Google’s OSS-Fuzz project, where Google fuzz tests open source software. OSS-Fuzz has found tens of thousands of defects and security vulnerabilities in open source software [5].

National and international automotive cybersecurity standards, such as ISO 21434 and Singapore’s TR 68, recommend or require fuzz testing because of its ability to test rare edge cases and find defects. Motional’s first-in-the-industry AVCDL also requires fuzz testing.

Continuous Testing in the Face of Software Change

One-off fuzzing is useful for identifying weaknesses in a particular software version. However, due to our Continuous Learning Framework, Motional’s software changes daily, requiring constant testing and verification.

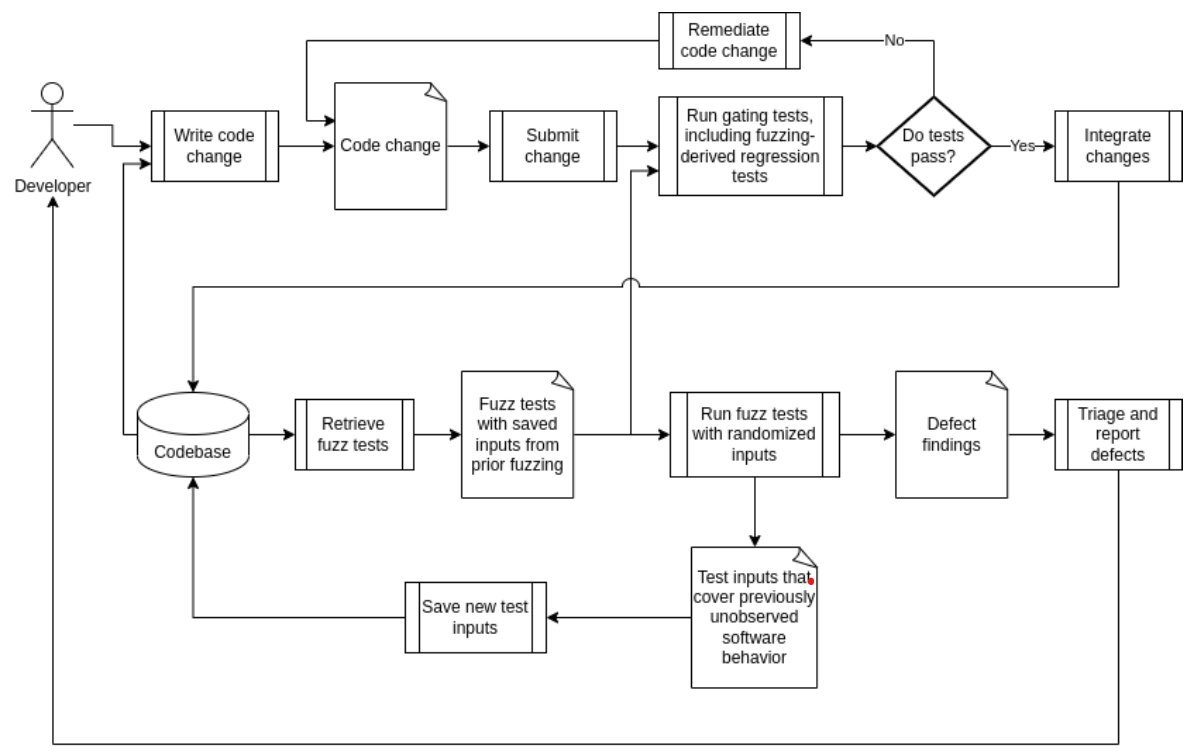

Continuous fuzzing is the fuzzing analogue of continuous testing, where tests are triggered periodically to catch new issues in code, ideally before integrating code changes into production. Continuous testing improves developer productivity by giving rapid feedback on code changes while they are still in progress, rather than uncovering issues at the end of a build. The actual frequency of testing is up to the developer. It could happen as soon as a line of code changes; at Motional, we often test on a daily cadence. Continuous fuzzing augments this battery of continuous tests.

The challenge is to set limits when fuzz testing. Unlike other testing techniques that use fixed inputs, fuzz testing, by its nature, requires random inputs from a nearly boundless array of scenarios. It’s simply not possible to test for every scenario; at some point, a test suite must decide whether a code change is acceptable or not within a reasonable amount of time. Recent research found that running fuzz tests for as little as 15 minutes can still be effective at detecting and stopping important defects from reaching production and being deployed [1].
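One way to bound a fuzz run is to give it a wall-clock budget and stop at the first finding or when the time runs out. The sketch below assumes a hypothetical target, `decode_length_prefixed`, with a deliberate bounds-checking defect; the function names and the uniform random byte generator are illustrative choices, not a description of any particular fuzzing tool.

```python
import random
import time
from typing import Callable, Optional


def decode_length_prefixed(data: bytes) -> bytes:
    """Hypothetical target: the first byte gives the payload length."""
    if not data:
        return b""
    length = data[0]
    # Deliberate defect: no check that the payload really has `length` bytes.
    return bytes(data[1 + i] for i in range(length))


def fuzz_with_budget(
    target: Callable[[bytes], None],
    budget_seconds: float,
    seed: int = 0,
    max_len: int = 64,
) -> Optional[bytes]:
    """Run random inputs until the budget expires; return the first failing input."""
    rng = random.Random(seed)
    deadline = time.monotonic() + budget_seconds
    while time.monotonic() < deadline:
        size = rng.randint(0, max_len)
        data = bytes(rng.randrange(256) for _ in range(size))
        try:
            target(data)
        except Exception:
            return data  # any uncaught exception counts as a finding
    return None  # budget exhausted without a finding
```

In a pipeline, a call such as `fuzz_with_budget(decode_length_prefixed, budget_seconds=15 * 60)` would gate a change on a fixed time budget: a returned input fails the check, and `None` lets the change through.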

An even faster approach is to repurpose fuzz tests as regression tests that execute on saved inputs generated from prior fuzzing. Many fuzz testing tools preserve inputs that have triggered previously unobserved software behavior. We can preserve a corpus of such inputs and create a new regression test by replaying those inputs against future versions of the code. This enables fuzz tests to provide developers with rapid feedback on software correctness.
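A minimal sketch of corpus replay follows, assuming a corpus stored as one file per input in a directory. The helper names `save_to_corpus` and `replay_corpus` are hypothetical, and naming files by content hash is simply a convenient way to avoid storing duplicates.

```python
import hashlib
import tempfile
from pathlib import Path
from typing import Callable, List


def save_to_corpus(corpus_dir: Path, data: bytes) -> Path:
    """Store one interesting input, named by its content hash to avoid duplicates."""
    corpus_dir.mkdir(parents=True, exist_ok=True)
    path = corpus_dir / hashlib.sha256(data).hexdigest()
    path.write_bytes(data)
    return path


def replay_corpus(corpus_dir: Path, target: Callable[[bytes], None]) -> List[Path]:
    """Run the target on every saved input; return the files that still fail."""
    failures = []
    for path in sorted(corpus_dir.iterdir()):
        try:
            target(path.read_bytes())
        except Exception:
            failures.append(path)
    return failures


if __name__ == "__main__":
    with tempfile.TemporaryDirectory() as tmp:
        corpus = Path(tmp) / "corpus"
        save_to_corpus(corpus, b"\x05abc")  # an input that once triggered a failure
        # A target that accepts every input stands in for the fixed code.
        print(replay_corpus(corpus, lambda data: None))
```

Because the replay involves no random generation, its running time depends only on the size of the corpus, which makes it suitable for running on every change.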

Keeping Fuzz Tests Maintainable

Continuous testing has taught software engineers the importance of keeping a test suite with a low maintenance cost [4]. Maintenance costs, which can include time lost adjusting the tests as well as monetary investments, are a burden on developer productivity. And if continuous testing proves costly, that could jeopardize acceptance of continuous fuzzing, especially if fuzzing is new to developers.

Brittle tests are an example of a particularly dangerous maintenance problem.

A brittle test breaks even under correctly implemented changes that preserve existing behavior (e.g., refactorings, adding new features). When brittle tests raise such false alarms, developers must pay a maintenance cost to rectify the tests. This diminishes the value of tests as a source of rapid feedback on software correctness. Brittle tests risk increasing developer frustration and reducing developers’ confidence in the value of testing altogether. Avoiding brittleness is therefore critical to developer acceptance of continuous fuzzing.

Testing-only code is also a potential source of brittleness and reduced developer productivity. While some testing-only code is unavoidable, such code incurs a maintenance cost on development, and testing-only code should provide sufficient value to compensate for its maintenance cost. The practice of using conditional compilation to create a fuzzing-friendly build and creating test doubles for fuzzing produces testing-only code that developers need to maintain in sync with production code. Neglecting to maintain testing-only code can mean that the software under test increasingly diverges from production software, resulting in test results that increasingly do not reflect actual software behavior.

For a deeper discussion of brittle tests and their impact on developer productivity, we recommend the Unit Testing [7] and Test Doubles [8] chapters from Software Engineering at Google.

We may find opportunities for easier or more performant fuzzing that come at the cost of brittleness. Testing only via a public software interface is preferable to depending on implementation details, as tests that depend on implementation details are liable to break when those details are changed correctly. However, a software component’s public interface might not be amenable to fuzzing, while the private helper functions it uses might be easier to fuzz.

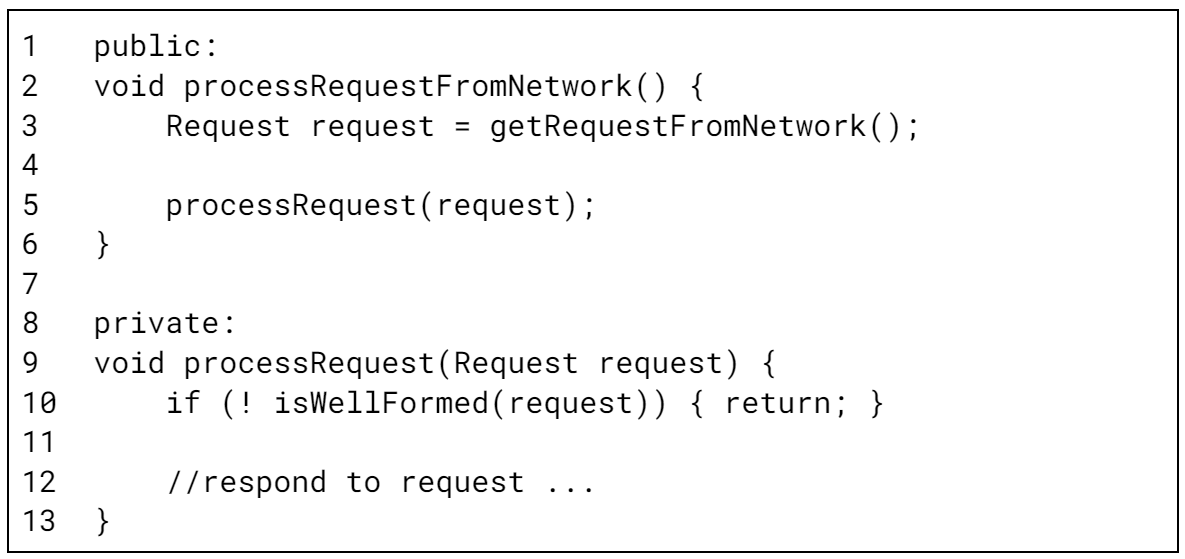

Consider the following example:

The public method processRequestFromNetwork accepts inputs from a network connection. Fuzzing the network connection with a network fuzzer is more difficult and incurs a performance penalty. The private method processRequest appears to be much more amenable to fuzzing, as a fuzz test can directly pass randomized requests into the function. However, fuzzing the private processRequest makes the fuzz test dependent on the implementation of processRequestFromNetwork.

Suppose a future code change refactors the isWellFormed check on line 10 out of processRequest and into line 4 in processRequestFromNetwork. The responsibility of checking the request’s well-formedness moves out of the private helper function and into its callers. As a result, the refactoring also requires modifying the fuzz test targeting processRequest to pass only well-formed requests, creating a maintenance cost for the developer implementing the refactoring. Is faster fuzzing without using a network fuzzer worth the cost of added brittleness of depending on implementation details? We do not prescribe a solution, but we encourage those implementing fuzz tests to consider the tradeoff.
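The listing this passage walks through is not reproduced in the text, so the sketch below is a hypothetical reconstruction in Python, and the line numbers mentioned above do not map onto it. Only the names processRequestFromNetwork, processRequest, and isWellFormed come from the text (the private helper is spelled `_processRequest` to follow Python convention). The class, the length-prefix request format, and the fuzz loop are assumptions made for illustration.

```python
import random


def isWellFormed(request: bytes) -> bool:
    """Hypothetical check: the first byte must equal the payload length."""
    return len(request) >= 1 and request[0] == len(request) - 1


class RequestHandler:
    def processRequestFromNetwork(self, connection) -> bytes:
        """Public interface: read one request from a connection and handle it."""
        request = connection.recv(4096)
        return self._processRequest(request)

    def _processRequest(self, request: bytes) -> bytes:
        """Private helper that currently owns the well-formedness check."""
        if not isWellFormed(request):
            return b"ERR"
        return b"OK " + request[1:]


def fuzz_private_helper(handler: RequestHandler, iterations: int, seed: int = 0) -> int:
    """Fuzz test coupled to the private helper; returns the number of inputs tried."""
    rng = random.Random(seed)
    for _ in range(iterations):
        size = rng.randint(0, 16)
        request = bytes(rng.randrange(256) for _ in range(size))
        # This call relies on _processRequest tolerating malformed input.
        response = handler._processRequest(request)
        assert response == b"ERR" or response.startswith(b"OK ")
    return iterations
```

If the `isWellFormed` check later moves into `processRequestFromNetwork`, the helper is entitled to assume well-formed input, and `fuzz_private_helper` has to be rewritten to generate only well-formed requests even though the component's public behavior has not changed.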

Suppose instead we decide to use a test double to simulate network I/O on line 3. Perhaps a test double already exists for regression testing, but the test double is implemented using a heavyweight mocking framework with features that may be useful for regression testing, but only serve to needlessly consume CPU cycles when fuzzing. We can implement a new, lightweight test double for the fuzz test to simulate network I/O. A new test double, however, creates additional testing-only code that developers need to keep in sync with the actual software the double is mimicking. This creates additional maintenance costs.
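A lightweight test double for this purpose might look like the sketch below. `FakeConnection`, its `recv` method, and the simplified stand-in for processRequestFromNetwork are all hypothetical; the point is only that a hand-written fake with no mocking framework keeps the per-input overhead low while letting the fuzz test go through the public interface.

```python
import random


class FakeConnection:
    """Hypothetical test double: returns preset bytes instead of doing network I/O."""

    def __init__(self, payload: bytes) -> None:
        self._payload = payload

    def recv(self, max_bytes: int) -> bytes:
        # Hand back up to max_bytes and keep the remainder for the next call.
        chunk, self._payload = self._payload[:max_bytes], self._payload[max_bytes:]
        return chunk


def processRequestFromNetwork(connection) -> bytes:
    """Simplified stand-in for the public method under test."""
    request = connection.recv(4096)
    if not request or request[0] != len(request) - 1:
        return b"ERR"
    return b"OK " + request[1:]


def fuzz_public_interface(iterations: int, seed: int = 0) -> int:
    """Fuzz through the public entry point using the fake connection."""
    rng = random.Random(seed)
    for _ in range(iterations):
        size = rng.randint(0, 16)
        payload = bytes(rng.randrange(256) for _ in range(size))
        response = processRequestFromNetwork(FakeConnection(payload))
        assert response == b"ERR" or response.startswith(b"OK ")
    return iterations
```

The fuzz test no longer depends on private helpers, but `FakeConnection` is itself testing-only code: if the real connection's read behavior changes, the fake has to change with it.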

If performing a one-off fuzzing campaign, we don't need to worry about maintaining our fuzz tests in the face of future code changes. But when continuously fuzzing, we should consider how the fuzz tests will help or hinder development. We urge those implementing continuous fuzzing to consider the dimension of test maintainability and strive to improve developer productivity.

The more software that’s introduced into a vehicle, especially a vehicle that relies heavily on data collection, the more risk is introduced into the overall system. The risk could come from vulnerable code, faulty sensors, or a cybersecurity attack.

Reducing risk through continuous testing is critical. It’s far better to uncover weaknesses in a controlled environment than through a public incident.

Testing doesn’t have to be a drag on development. When done well, security testing practices such as continuous fuzzing serve as a net gain on developer productivity while making software safer, more secure, and more reliable.

Laboni Sarker, a Ph.D. student at the University of California, Santa Barbara, contributed to this research.

References:

[1] Effectiveness and Scalability of Fuzzing Techniques in CI/CD Pipelines. Thijs Klooster, Fatih Turkmen, Gerben Broenink, Ruben Hove, Marcel Böhme (2022). doi:10.48550/arXiv.2205.14964

[2] Why Go fuzzing? Dmitry Vyukov, Romain Baugue

[3] Why (Continuous) Fuzzing? Yevgeny Pats

[4] The Importance of Maintainability, Software Engineering at Google

[5] https://bugs.chromium.org/p/oss-fuzz/issues/list

[6] https://github.com/google/fuzzing

[7] Unit Testing, Software Engineering at Google

[8] Test Doubles, Software Engineering at Google