The ability to reason accurately about the surrounding driving scene and anticipate the behavior of other road users is vital for safe and effective autonomous driving. Experienced human drivers learn to predict that a neighboring vehicle with its turn signal on will likely change lanes, or that a pedestrian walking at the edge of a road may be about to jaywalk.

To achieve fully autonomous driving, AV systems must be equipped with a "world model" that reasons about how road users are likely to behave given observed motion states, surrounding road geometry, and vital contextual information such as turn signals, traffic lights, and road signs.

A typical road interaction requiring strong prediction reasoning. Correctly anticipating that another vehicle will drive into our lane allows us to slow down safely.

Behavior Prediction is a task best handled by AI, and directly supports ML-based planning

The astounding rate of progress in artificial intelligence in recent years has resulted in the development of neural networks capable of performing behavioral modeling tasks. Leading AV companies, including Motional, have developed sophisticated transformer- or diffusion-based models capable of processing vast amounts of online perception and map data to generate multimodal behavioral predictions for cyclists, pedestrians, and road vehicles [1][2][3].

Trajectories of surrounding road users from these behavior prediction models originally served as an input to a separate rule-based planning stack. However, with the rise of end-to-end planning approaches, these behavior reasoning models are now increasingly extended to generate strong candidate trajectories for the autonomous vehicle itself.

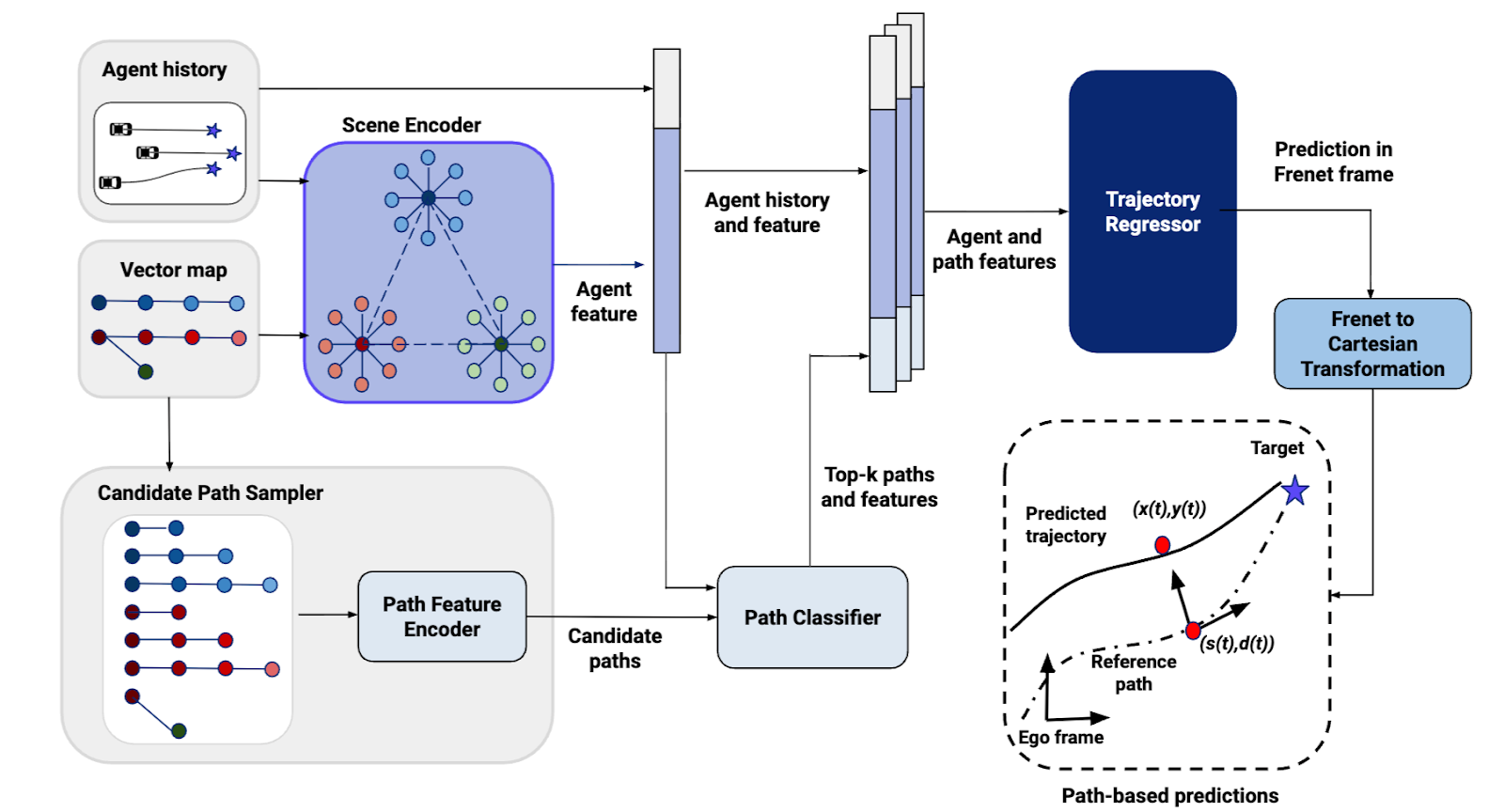

Many published architectures exist for deep-learning-based motion forecasting. This is just one example from a previously published Motional paper.

Motional has achieved tremendous on-road performance improvements from scaling up our core behavior prediction networks

Scaling up Motional's behavior prediction network has been a major part of our vision to develop Large Driving Models that support globally scalable autonomous driving. In the last year, we've focused on scaling up our on-board motion forecasting models through parallel investments in both training data expansion and model capacity expansion.

Dataset scaling requires a focus on both quantity and quality

Modern transformer-based AI models are notoriously data-hungry, and behavior prediction models for self-driving cars are no exception. Motional's fleet of AVs collects tens of thousands of miles of data every month, allowing us to form large, diverse datasets comprising hundreds of millions of driving samples for our network to learn from. Over the last year, we've expanded the size of our production training dataset by a factor of 40.

While our models benefited significantly from a pure increase in dataset size, we also needed to make sure that the additional training data covered a sufficient diversity of interesting scenarios. Published academic literature related to behavior forecasting often focuses on aggregate metrics from large open-source datasets such as Motional’s nuScenes or Argoverse. However, we have found that improvements in aggregate metrics do not always correlate with improvements in end-to-end on-road behavior.
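To illustrate why an aggregate metric can hide a regression, here is a minimal Python sketch that reports a mean displacement error both overall and per scenario tag. The function name, the tags, and all numbers are made up for illustration and do not come from Motional's tooling or data.

```python
from collections import defaultdict
from statistics import mean


def mean_error_by_scenario(samples):
    """Return (overall mean error, {scenario tag: mean error}).

    `samples` is an iterable of (scenario_tag, displacement_error_m) pairs.
    Both the tags and the error values are hypothetical inputs.
    """
    per_tag = defaultdict(list)
    for tag, error in samples:
        per_tag[tag].append(error)
    overall = mean(e for errors in per_tag.values() for e in errors)
    return overall, {tag: mean(errors) for tag, errors in per_tag.items()}


# Made-up data: nine common lane-following samples and one rare jaywalker sample.
baseline = [("lane_follow", 1.0)] * 9 + [("jaywalker", 2.0)]
candidate = [("lane_follow", 0.5)] * 9 + [("jaywalker", 4.0)]

base_overall, base_slices = mean_error_by_scenario(baseline)
cand_overall, cand_slices = mean_error_by_scenario(candidate)

# The candidate looks better in aggregate but is worse on the rare slice.
print(base_overall, cand_overall)
print(base_slices["jaywalker"], cand_slices["jaywalker"])
```

In this toy case the candidate's overall error drops while its error on the rare jaywalker slice doubles, which is the kind of gap that scenario-specific datasets and metrics are meant to expose.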

To ensure we have sufficient dataset quality as well as quantity, Motional goes the extra mile to curate custom internal datasets tailored around critical driving situations such as:

- Jaywalking pedestrians on busy streets

- Large buses and trucks cutting into our lane

- Scooters and e-bikes going from sidewalks to the road

- Vehicles performing aggressive maneuvers at intersections

- Pedestrians getting in and out of cars

Fortunately, we are able to leverage the strong data mining infrastructure developed at Motional over the last several years, including the recent development of offline ML-based scenario mining models such as Omnitag.

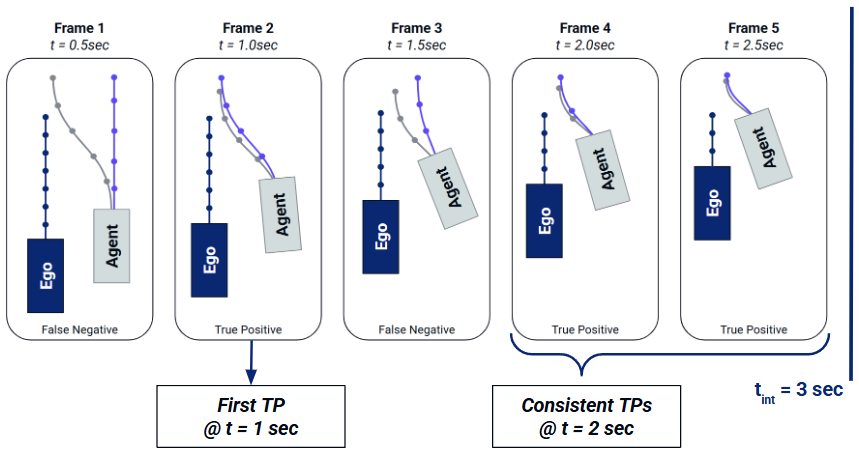

We have also found that it's not sufficient to release new models based only on improvements to standard published metrics such as Average Displacement Error. At Motional, our ML teams have been hard at work developing a comprehensive suite of advanced model evaluation metrics. These advanced metrics can look temporally over several consecutive seconds of inference results to ensure our forecasting models accurately anticipate fundamental interactions such as vehicle cut-ins and pedestrian jaywalker crossings.
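For readers unfamiliar with the standard metrics mentioned above, the following Python sketch computes Average Displacement Error (ADE), Final Displacement Error (FDE), and the minimum ADE over several predicted modes. It follows the common definitions from the motion forecasting literature; the function names and the example trajectories are illustrative assumptions, not Motional's evaluation code.

```python
import math


def displacement_errors(predicted, ground_truth):
    """Per-timestep Euclidean distance between two (x, y) trajectories."""
    if not predicted or len(predicted) != len(ground_truth):
        raise ValueError("trajectories must be non-empty and of equal length")
    return [math.dist(p, g) for p, g in zip(predicted, ground_truth)]


def ade(predicted, ground_truth):
    """Average Displacement Error: mean distance over all timesteps."""
    errors = displacement_errors(predicted, ground_truth)
    return sum(errors) / len(errors)


def fde(predicted, ground_truth):
    """Final Displacement Error: distance at the last timestep."""
    return displacement_errors(predicted, ground_truth)[-1]


def min_ade(modes, ground_truth):
    """Best ADE among several predicted modes (multimodal forecasting)."""
    return min(ade(mode, ground_truth) for mode in modes)


# Made-up example: ground truth drives straight along the x-axis.
truth = [(0.0, 0.0), (1.0, 0.0), (2.0, 0.0)]
mode_a = [(0.0, 0.0), (1.0, 1.0), (2.0, 2.0)]  # drifts steadily sideways
mode_b = [(0.0, 0.0), (1.0, 0.0), (2.0, 1.0)]  # drifts only at the end

print(ade(mode_a, truth), fde(mode_a, truth), min_ade([mode_a, mode_b], truth))
```

Each of these metrics scores a single inference result in isolation, which is why they cannot by themselves say how early or how consistently an interaction was anticipated.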

Example of an advanced temporal metric developed at Motional. The metric is able to determine how early we are able to anticipate a "true positive" vehicle cut-in by looking at several consecutive inference results.
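One way such a temporal metric could work is sketched below in Python. This is an assumed design, not the metric Motional uses: the function name, the probability threshold, and the requirement of three consecutive frames are all hypothetical. Given per-frame cut-in probabilities and the time at which the cut-in actually happened, it returns how many seconds in advance the model first predicted the cut-in for several frames in a row.

```python
def anticipation_lead_time(frames, event_time_s, threshold=0.5, min_consecutive=3):
    """Seconds before the event at which a cut-in was first stably predicted.

    `frames` is a time-sorted list of (timestamp_s, cut_in_probability) pairs,
    one per inference result. A prediction counts as stable once the
    probability stays at or above `threshold` for `min_consecutive` frames
    in a row. Returns None if that never happens before the event.
    """
    run_start = None
    run_length = 0
    for timestamp_s, cut_in_prob in frames:
        if timestamp_s > event_time_s:
            break
        if cut_in_prob >= threshold:
            if run_length == 0:
                run_start = timestamp_s
            run_length += 1
            if run_length >= min_consecutive:
                return event_time_s - run_start
        else:
            # A single low-probability frame resets the streak.
            run_length = 0
    return None


# Made-up inference results sampled every 0.5 s; the cut-in occurs at t = 3.0 s.
frames = [(0.0, 0.1), (0.5, 0.6), (1.0, 0.2), (1.5, 0.7),
          (2.0, 0.8), (2.5, 0.9), (3.0, 0.95)]
print(anticipation_lead_time(frames, event_time_s=3.0))  # 1.5
```

Requiring consecutive frames means the isolated spike at 0.5 s does not count as anticipation, so a flickering prediction is not rewarded.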

Amazingly, we found that intelligently scaling up the size and improving the quality of our custom datasets led to a nearly 50% improvement in our on-road comfort metrics, measured both by human subjective feedback and our quantitative comfort assessment systems.

Example of improved reasoning from dataset scaling. (Left) A model with a smaller dataset shows a "false positive" vehicle cut-in prediction, which can cause an AV to make an uncomfortable brake tap. (Right) Scaling the dataset and adding more curated training samples creates significantly improved predictions.

Increasing model capacity has significant benefits, but care must be taken to avoid physically unrealistic predictions

Recently published literature [4][5] has shown compelling evidence of how behavioral models scale with a combined increase in both the number of training samples and the number of trainable model parameters. At Motional, our recent experience scaling our internal prediction model also matches these findings.

We have recently completed an effort to scale up our on-board model capacity by a factor of 5, which required several important engineering optimizations to ensure that we stay within our latency and compute budget.

Early results from our capacity scaling effort indicate we will see a 30-50% boost in our on-road comfort metrics, similar to our dataset expansion efforts.

However, an increase in model capacity is not without risks. Similar to large language model hallucinations, we've found that increasing on-board model capacity can increase the risk of behavior models generating unrealistic predictions, such as predicting that a vehicle will parallel park when it is actually just decelerating for a stop sign.

To mitigate these risks and safely deploy larger models, it's necessary to:

- Ensure behavior models are trained with a network and loss function design that encourages physically realistic predictions

- Carefully monitor outlier training data that a larger capacity model might overfit to

- Have a strong model evaluation system in place to prevent candidate models with a high rate of unrealistic predictions from reaching deployment
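As a rough illustration of the last point, the Python sketch below flags predicted trajectories whose implied speed or change in speed exceeds simple limits, then reports the fraction of flagged predictions so a candidate model could be compared against a release threshold. The function names and the limits (40 m/s, 8 m/s²) are assumptions chosen for the example, and the check only covers speed along the path, not lateral dynamics or semantic errors such as the parallel parking case above.

```python
import math


def is_kinematically_plausible(trajectory, dt_s, max_speed_mps=40.0, max_accel_mps2=8.0):
    """Check a list of (x, y) waypoints sampled every `dt_s` seconds.

    Speeds come from consecutive waypoint distances; accelerations come
    from consecutive speed differences. The limits are illustrative only.
    """
    speeds = [math.dist(a, b) / dt_s for a, b in zip(trajectory, trajectory[1:])]
    accels = [abs(v1 - v0) / dt_s for v0, v1 in zip(speeds, speeds[1:])]
    return (all(v <= max_speed_mps for v in speeds)
            and all(a <= max_accel_mps2 for a in accels))


def implausible_rate(trajectories, dt_s):
    """Fraction of predicted trajectories that fail the plausibility check."""
    if not trajectories:
        raise ValueError("need at least one trajectory")
    flagged = sum(not is_kinematically_plausible(t, dt_s) for t in trajectories)
    return flagged / len(trajectories)


# Made-up predictions at 0.5 s spacing: one steady 10 m/s, one with a 25 m jump.
smooth = [(0.0, 0.0), (5.0, 0.0), (10.0, 0.0), (15.0, 0.0)]
jumpy = [(0.0, 0.0), (5.0, 0.0), (30.0, 0.0), (35.0, 0.0)]

print(implausible_rate([smooth, jumpy], dt_s=0.5))  # 0.5
```

A gate built on a rate like this would only catch physically impossible motion; detecting plausible-looking but wrong maneuvers still needs scenario-level evaluation.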

Example of improved behavior forecasting from model capacity scaling. A smaller model (left) is more likely to incorrectly predict that vehicles will cross in front of our AV at intersections, while a larger model (right) shows significantly improved intersection reasoning.

The Future of Behavior Models

AV systems are rapidly consolidating from many small models performing specific tasks to several Large Driving Models capable of high-level reasoning. As a result, we are leveraging our large behavior models not just to predict the behavior of other agents but also to help directly reason about the next actions our AVs should execute.

Additionally, we can leverage our rapidly evolving vision capabilities to provide additional visual context to our behavior models, allowing us to reason about cues like a pedestrian looking at their cellphone or authority figures navigating traffic.

If these sorts of challenging behavioral modeling opportunities excite you, we are hiring!